The Circuits, Architecture, Systems, Technology Lab (CAST Lab) focuses on research and development of modern computer systems based on specialized hardware in the post-Moore’s law era. Members of CAST Lab conduct research in various fields of hardware design such as computer architecture, VLSI, FPGA, hardware/software co-design, and processing-in-memory with a holistic design approach to improve overall system performance. The group’s current mission is to build a high-performance and scalable computing platform for future AI applications.

AI Accelerators: Design Next Generation AI Hardware Platform

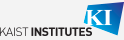

Machine learning (ML), the study of algorithms that enable artificial intelligence (AI), has become a dominant computing paradigm, revolutionizing how computers handle cognitive tasks by learning from massive amounts of observed data. As more industries adopt this technology, demand is growing for supporting hardware that performs the required computations with high performance and energy efficiency.

In AI accelerator research, the CAST Lab performs design space exploration (DSE) of highly scalable heterogeneous architectures and system solutions for next-generation AI/ML scenarios using a SW/HW co-design approach. The group builds a simulation framework for these solutions and implements them on physical devices (i.e., FPGA and ASIC) to support the development, debugging, and deployment of AI accelerators.

Multi-FPGA Systems: Build Server Infrastructure for Datacenters

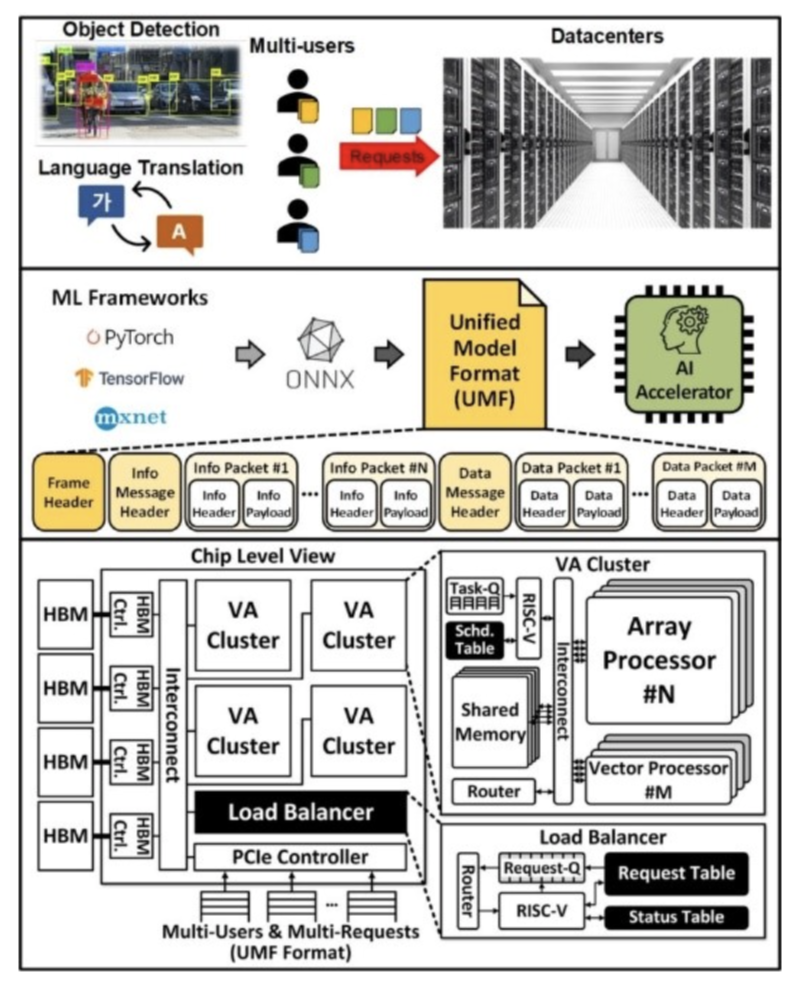

Cloud computing is rapidly changing how enterprises run their services by offering a virtualized computing infrastructure over the internet. Datacenters are the powerhouses behind cloud computing, physically hosting millions of computer servers, communication cables, and data storage. Recently, as the number of datacenter services using AI has increased, systems more efficient than existing ones are needed. To address this challenge, datacenters are working to deploy multi-FPGA appliances to accelerate their services.

In multi-FPGA research, the group aims to develop a multi-FPGA server infrastructure that not only accelerates datacenter services but also provides solutions for customized system design. An FPGA-based accelerator provides fully reprogrammable hardware that can support new operations and the larger dimensions of evolving services with minimal redesign cost compared to an ASIC-based accelerator. It also has a significantly lower total cost of ownership than a GPU-based accelerator, owing to its relatively low device and power costs.

Processing-in-Memory: Create Computer Systems In Memories

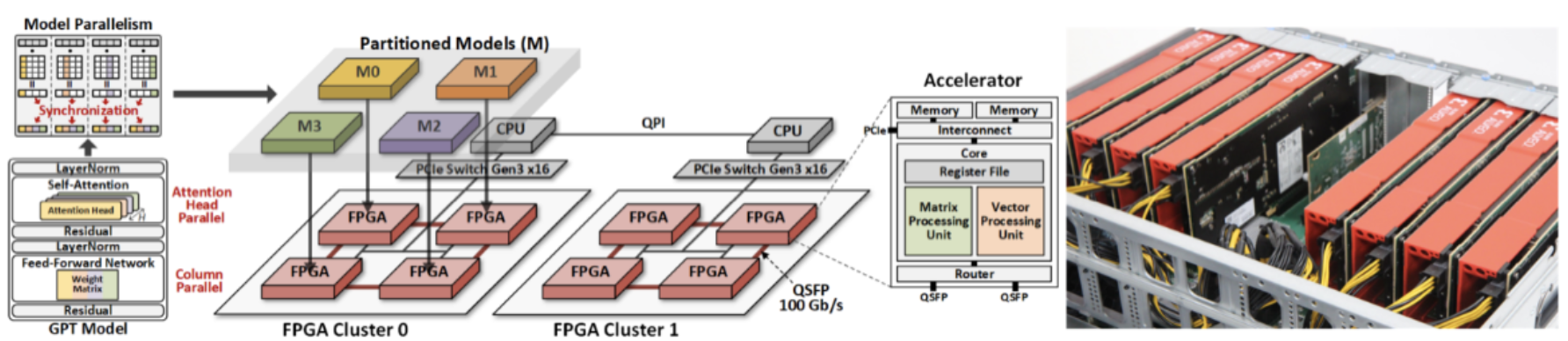

Traditionally, the CPU has been the center of the computing system, executing arithmetic and logic operations, while memory is built around it simply to load and store data. Today, as a result of technology scaling, the computing unit executes operations faster than the memory unit can load and store the required data. The computing unit is therefore no longer the most time-consuming and energy-consuming part of the system; instead, the cost of moving data to the locations where computations happen has become the bottleneck.

The memory-centric model takes the opposite approach to the traditional compute-centric model to address this expensive data movement problem. It adopts processing-in-memory (PIM) to remove redundant data movement: PIM integrates processing engines in or around the memory to perform computations, which allows the data to stay in the memory. The trend of adopting PIM can be seen at multiple levels of the hardware system. The group's PIM research applies PIM at the main memory and cache levels, which use DRAM and SRAM, respectively, as their hardware devices.

Professor Joo-Young Kim

Joo-Young Kim received B.S., M.S., and Ph.D. degrees in Electrical Engineering from the Korea Advanced Institute of Science and Technology (KAIST) in 2005, 2007, and 2010, respectively. He is currently an Assistant Professor in the School of Electrical Engineering at KAIST. He is also the Director of the AI Semiconductor Systems (AISS) research center. His research interests span various aspects of hardware design, including VLSI design, computer architecture, FPGA, domain-specific accelerators, hardware/software co-design, and agile hardware development. Before joining KAIST, Joo-Young was a Senior Hardware Engineering Lead at Microsoft Azure, working on hardware acceleration for its hyper-scale big data analytics platform, Azure Data Lake. Before that, he was one of the initial members of the Catapult project at Microsoft Research, where he deployed a fabric of FPGAs in datacenters to accelerate critical cloud services such as machine learning, data storage, and networking.

Joo-Young is a recipient of the 2016 IEEE Micro Top Picks Award, the 2014 IEEE Micro Top Picks Award, the 2010 DAC/ISSCC Student Design Contest Award, the 2008 DAC/ISSCC Student Design Contest Award, and the 2006 A-SSCC Student Design Contest Award. He served as an Associate Editor for the IEEE Transactions on Circuits and Systems I: Regular Papers (2020-2021).