The KAIST KI-ITC Augmented Reality Research Center (ARRC) was established in September 2016 and is led by Prof. Woontack Woo. The center carries out Augmented Human (AH) research, which aims to expand the physical, intellectual, and social capabilities of users through Augmented Reality (AR).

Currently, ARRC is participating in the Situation, Human, Environment, and Natural Interaction (SHE&I) project, which focuses on developing intelligent interaction technology based on context awareness and an understanding of human intention. The project aims to analyze multimodal information such as images, sounds, text, and IoT sensor data with artificial intelligence techniques, and to capture the user’s condition in relation to intentions, life patterns, and habits. By integrating this cognitive information, the project develops an intelligent interaction technology that performs customized, preemptive interaction.

In this project, ARRC is working on configuring a Holistic Quantified Self (HQS) by constructing a testbed. The HQS is defined as a digital quantified self that collects and quantifies the physical, emotional, social, and behavioral states of a user through multimodal sensors embedded in a smartphone and assorted wearable devices. By considering an HQS pattern, one can analyze the e-personality of the user and support intelligent agents that provide information and services appropriate for that user. The intelligent agent represents the user and interacts with the environment and the service provider to deliver the necessary information and services to the user in situ and in real time. Through the HQS framework, the intelligent agent can negotiate with the environment on the user’s behalf, and agent-based context-aware services are implemented on the testbed, where IoT devices are deployed.
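To make the HQS definition more concrete, the following is a minimal illustrative sketch of how readings from several devices might be collected and summarized along the four state dimensions named above. It is not ARRC's implementation: the class names (`Reading`, `HolisticQuantifiedSelf`), the device names, the normalization of values to the range 0 to 1, and the use of a simple average as the "quantify" step are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List, Optional

# The four state dimensions named in the HQS definition.
DIMENSIONS = ("physical", "emotional", "social", "behavioral")


@dataclass
class Reading:
    """One sensor observation (hypothetical structure)."""
    dimension: str  # one of DIMENSIONS
    source: str     # assumed device label, e.g. "smartwatch"
    value: float    # assumed to be pre-normalized to [0, 1]


@dataclass
class HolisticQuantifiedSelf:
    """Collects readings per dimension and reduces them to a profile."""
    readings: Dict[str, List[Reading]] = field(
        default_factory=lambda: {dim: [] for dim in DIMENSIONS}
    )

    def collect(self, reading: Reading) -> None:
        # Reject readings that do not fit the assumed schema.
        if reading.dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {reading.dimension}")
        if not 0.0 <= reading.value <= 1.0:
            raise ValueError("value must be normalized to [0, 1]")
        self.readings[reading.dimension].append(reading)

    def quantify(self) -> Dict[str, Optional[float]]:
        # Average each dimension; None marks a dimension with no data yet.
        profile: Dict[str, Optional[float]] = {}
        for dim in DIMENSIONS:
            values = [r.value for r in self.readings[dim]]
            profile[dim] = mean(values) if values else None
        return profile


# Example usage with made-up readings from two hypothetical devices.
hqs = HolisticQuantifiedSelf()
hqs.collect(Reading("physical", "smartwatch", 0.5))
hqs.collect(Reading("physical", "smartphone", 1.0))
profile = hqs.quantify()
```

An agent acting for the user could consult such a profile when choosing which service to request from the environment. A real system would likely need time windows, per-sensor weighting, and privacy controls, none of which are modeled here.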

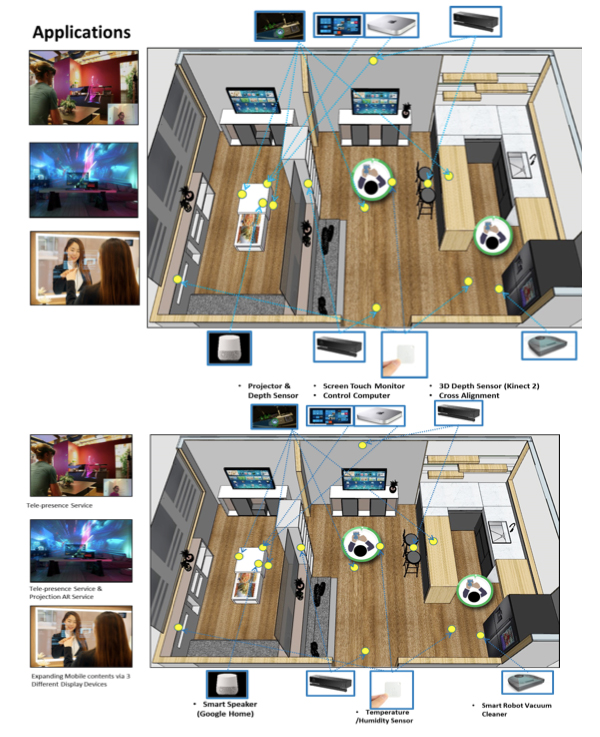

To verify the effectiveness of the HQS, ARRC is working on a testbed that includes features likely to be used in smart homes within the next five years. The environment consists of three sections, as shown in Figure 1: a private room, a living room for multiple users, and a dining area for serving meals. Within the testbed environment, the following three services are currently being developed.

● Tele-presence Service

ARRC is preparing a service scenario in which the user can communicate with a remote person. This tele-presence service uses an Augmented Reality Head Mounted Display (AR-HMD) to show a 3D-reconstructed, augmented representation of a person who is in a different space.

● Projection AR Service

In addition to tele-presence, ARRC plans a projection AR feature for the private room and the living room. The team is working to extend the display boundary so that content is shown not only inside the physical TV frame but also outside it.

● Expanding Mobile Content via Three Different Display Devices

Content can be displayed on three kinds of devices: touch screen monitors, smart mirrors, and projectors. Users can connect their smartphones to the monitor to share content with others. The monitor then shows expanded, additional information associated with the content on the phone. Smart mirrors and projectors work similarly to the monitor, but they can also provide augmented features, such as information overlaid on food ingredients, based on camera tracking and projection mapping technologies.

Woo, Woontack (Augmented Reality Research Center)

Homepage: http://ahrc.kaist.ac.kr

E-mail: wwoo@kaist.ac.kr