Prof. Sang Wan Lee’s research group at KAIST has developed a machine learning system that infers the uncertainty of human knowledge by modeling human decision-making with Bayesian neural networks. The results could improve the sampling efficiency and prediction reliability of human-in-the-loop systems for smart healthcare, smart education, and human-computer interaction. The study was presented at AAAI 2021 in February 2021 (paper title: “Human Uncertainty Inference via Deterministic Ensemble Neural Networks,” AAAI 2021 Main Track).

All learning systems, whether humans or machine learning models, inevitably face uncertainty. The predictive uncertainty of machine learning algorithms is relatively easy to access (e.g., through statistical measures), but its implications are tricky to interpret. In contrast, uncertainty in humans is highly interpretable (you can ask them to explain), but hard to measure (you don’t want to bug them with repeated questioning).

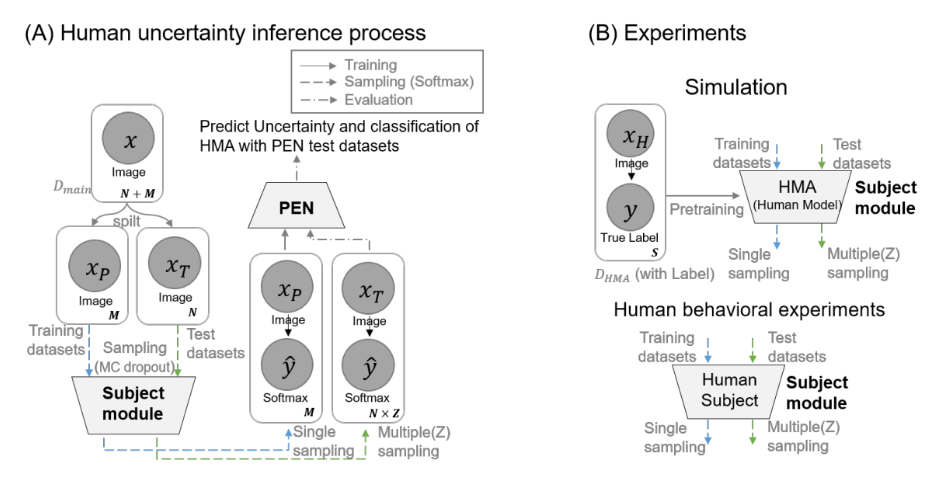

The research team has shown that the problem of accessing human uncertainty can be solved through “inference” rather than “measurement”. Humans often respond differently to the same stimulus or question, especially when it is highly uncertain. The same tendency appears in Bayesian neural networks (BNNs), in which parameters sampled randomly at inference time lead to output variability. This suggests that, under carefully controlled conditions, the human decision-making process can be approximated by BNNs.
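The link between sampled parameters and variable responses can be illustrated with a toy model. The sketch below is not the network from the study: it assumes a two-weight linear classifier whose weights are drawn from independent Gaussians, and the names `sample_choice`, `choice_entropy`, `W_MEAN`, and `W_STD`, along with all numeric values, are hypothetical. It only shows that repeated draws give near-identical answers for a clear stimulus and split answers for an ambiguous one.

```python
import math
import random


def sample_choice(x, w_mean, w_std, rng):
    """Draw one weight vector and return the resulting binary choice (0 or 1)."""
    # Assumption: independent Gaussian weights stand in for a BNN posterior.
    w = [rng.gauss(m, s) for m, s in zip(w_mean, w_std)]
    logit = sum(wi * xi for wi, xi in zip(w, x))
    return 1 if logit >= 0.0 else 0


def choice_entropy(x, w_mean, w_std, n_samples=2000, seed=0):
    """Estimate P(choice = 1) and its binary entropy (in bits) over repeated draws."""
    rng = random.Random(seed)
    ones = sum(sample_choice(x, w_mean, w_std, rng) for _ in range(n_samples))
    p1 = ones / n_samples
    if p1 in (0.0, 1.0):
        return p1, 0.0  # every draw agreed, so there is no response variability
    entropy = -(p1 * math.log2(p1) + (1.0 - p1) * math.log2(1.0 - p1))
    return p1, entropy


# Hypothetical weight distribution and stimuli, chosen only for illustration.
W_MEAN, W_STD = [2.0, -1.0], [0.5, 0.5]
p_clear, h_clear = choice_entropy([3.0, 0.0], W_MEAN, W_STD)  # far from the boundary
p_ambig, h_ambig = choice_entropy([0.5, 1.0], W_MEAN, W_STD)  # on the mean boundary

print(f"clear stimulus:     P(1)={p_clear:.3f}, entropy={h_clear:.3f} bits")
print(f"ambiguous stimulus: P(1)={p_ambig:.3f}, entropy={h_ambig:.3f} bits")
```

In this toy setting, the entropy of repeated choices plays the role of response variability: it is close to zero for the clear stimulus and close to one bit for the ambiguous one.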

Based on this hypothesis, the research group implemented a deterministic ensemble neural network, called PEN (proxy ensemble networks), that aims to learn the outputs a BNN produces under randomly sampled parameters. The PEN’s ensemble structure efficiently captures uncertainty information, making it possible not only to learn the uncertainty range of the original BNN for each data point but also to infer that range for new (unseen) data. This suggests that the PEN can learn and infer human uncertainty via BNNs.
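The general idea of a deterministic ensemble standing in for a stochastic model can be sketched as follows. This is an illustrative simplification, not the PEN architecture or training procedure from the paper: it assumes a logistic "teacher" with Gaussian-sampled weights, and it fits each ensemble member to the outputs of one teacher draw. The functions `fit_member`, `build_ensemble`, and `predict_range`, and all numeric settings, are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logistic_output(x, w):
    """Output of a logistic unit with fixed weights w on input x."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)))


def fit_member(data, targets, lr=0.5, epochs=300):
    """Fit one deterministic logistic unit to soft targets by gradient descent."""
    w = [0.0] * len(data[0])
    for _ in range(epochs):
        for x, t in zip(data, targets):
            y = logistic_output(x, w)
            # Cross-entropy gradient for a logistic output is (y - t) * x.
            for i in range(len(w)):
                w[i] -= lr * (y - t) * x[i]
    return w


def build_ensemble(data, w_mean, w_std, n_members=10, seed=0):
    """Train each member on the outputs of one random draw of the teacher."""
    rng = random.Random(seed)
    members = []
    for _ in range(n_members):
        w_draw = [rng.gauss(m, s) for m, s in zip(w_mean, w_std)]
        targets = [logistic_output(x, w_draw) for x in data]
        members.append(fit_member(data, targets))
    return members


def predict_range(members, x):
    """Return (mean, min, max) of member outputs; no sampling is needed here."""
    outs = [logistic_output(x, w) for w in members]
    return sum(outs) / len(outs), min(outs), max(outs)


# Hypothetical training grid and teacher distribution.
grid = [[a, b] for a in (-1.0, 0.0, 1.0) for b in (-1.0, 0.0, 1.0)]
members = build_ensemble(grid, w_mean=[2.0, -1.0], w_std=[0.3, 0.3])

# Two inputs that are not on the training grid.
mean_c, lo_c, hi_c = predict_range(members, [0.9, -0.8])  # clear case
mean_a, lo_a, hi_a = predict_range(members, [0.5, 1.0])   # ambiguous case
print(f"clear:     mean={mean_c:.3f}, range=[{lo_c:.3f}, {hi_c:.3f}]")
print(f"ambiguous: mean={mean_a:.3f}, range=[{lo_a:.3f}, {hi_a:.3f}]")
```

Once trained, the ensemble is fully deterministic: the spread across members, rather than repeated random sampling, provides the uncertainty range for an unseen input.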

By running large-scale human behavioral experiments with 64 physicians, the research group showed that the PEN could predict individual physicians’ choices and associated uncertainty during medical imaging diagnosis.

“The two biggest challenges were to design human behavioral experiments for measuring uncertainty information in realistic situations, and to conduct experiments with physicians,” said the first author of the paper, Yujin Cha, a graduate student in the Department of Bio and Brain Engineering at KAIST who leads this project. He added, “It took an enormous amount of time and effort to collect physicians’ data and train models, and I am happy that it finally paid off.”

“Our results demonstrated the capacity of machine learning to learn the intrinsic nature of human decision making, such as uncertainty or confidence. The proposed framework can serve to guide human learning in various applications, including curriculum learning, active learning, recommendation systems, etc.,” said Sang Wan Lee, a senior author and an associate professor in the Department of Bio and Brain Engineering at KAIST. This work was supported, in part, by the Daewoong Foundation, the National Research Foundation of Korea, and the Samsung Research Funding Center of Samsung Electronics.

Mr. Yujin Cha, Prof. Sang Wan Lee, Department of Bio and Brain Engineering, KAIST

Homepage: https://aibrain.kaist.ac.kr

E-mail: chayj@kaist.ac.kr