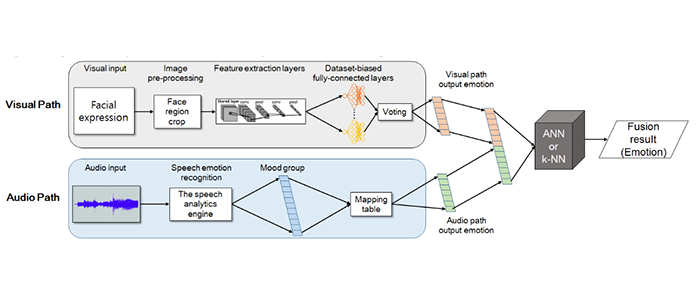

As part of a research project on developing a telepresence robot system to support POC services using ICT, Professor Dong-Soo Kwon's research team is developing an emotion recognition model for natural interaction between humans and robots. Recognizing human emotion is an important capability for social robots. Previous research has examined emotion recognition models that draw on many modalities, but several problems lower their accuracy when such a model is deployed on a robot. The research team proposed a decision-level fusion method that takes the outputs of each recognition model as input and identifies which combination of features achieves the highest accuracy. For facial expression recognition, the team used EdNet, a model developed at KAIST and based on convolutional neural networks (CNNs); for speech emotion recognition, it used a speech analytics engine.

Finally, the research team confirmed 43.40% higher accuracy when an artificial neural network (ANN) or the k-nearest neighbor (k-NN) algorithm was used to classify combinations of features from EdNet and the speech analytics engine. Building on these studies, the team is researching emotion recognition models that can be applied to robots.
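To make the idea of decision-level fusion concrete, the following is a minimal Python sketch, not the team's implementation. It assumes each recognizer (standing in for EdNet and the speech analytics engine) outputs a score vector over the same emotion labels, concatenates the two vectors, and classifies the result with a plain k-NN vote. The label set, the helper names `fuse` and `knn_predict`, and all numbers are hypothetical and invented purely for illustration.

```python
import math
from collections import Counter

# Hypothetical label set; the article does not list the emotion classes.
EMOTIONS = ["angry", "happy", "neutral", "sad"]


def fuse(face_scores, speech_scores):
    """Decision-level fusion: join the two models' output vectors into one feature vector."""
    return list(face_scores) + list(speech_scores)


def knn_predict(train, query, k=3):
    """Return the majority label among the k training vectors closest to `query`.

    `train` is a list of (fused_vector, label) pairs; distance is Euclidean.
    """
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy training set (invented values, one score per entry in EMOTIONS).
train = [
    (fuse([0.7, 0.1, 0.1, 0.1], [0.6, 0.1, 0.2, 0.1]), "angry"),
    (fuse([0.6, 0.1, 0.2, 0.1], [0.8, 0.0, 0.1, 0.1]), "angry"),
    (fuse([0.1, 0.7, 0.1, 0.1], [0.1, 0.6, 0.2, 0.1]), "happy"),
    (fuse([0.2, 0.6, 0.1, 0.1], [0.0, 0.8, 0.1, 0.1]), "happy"),
    (fuse([0.1, 0.1, 0.1, 0.7], [0.1, 0.1, 0.2, 0.6]), "sad"),
    (fuse([0.1, 0.1, 0.2, 0.6], [0.1, 0.0, 0.1, 0.8]), "sad"),
]

# The face model alone is undecided between "angry" and "happy";
# the speech model leans clearly toward "happy".
query = fuse([0.4, 0.4, 0.1, 0.1], [0.1, 0.7, 0.1, 0.1])
prediction = knn_predict(train, query, k=3)
print(prediction)
```

In this toy case the facial scores are tied, and the fused vector lets the speech scores break the tie. The method described above additionally compares different combinations of features, which this sketch omits by always using both full output vectors.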

Song, Kyuseob, KAIST Robotics Program

Prof. Kwon, Dong Soo, Department of Mechanical Engineering, KAIST

E-mail: songks@kaist.ac.kr