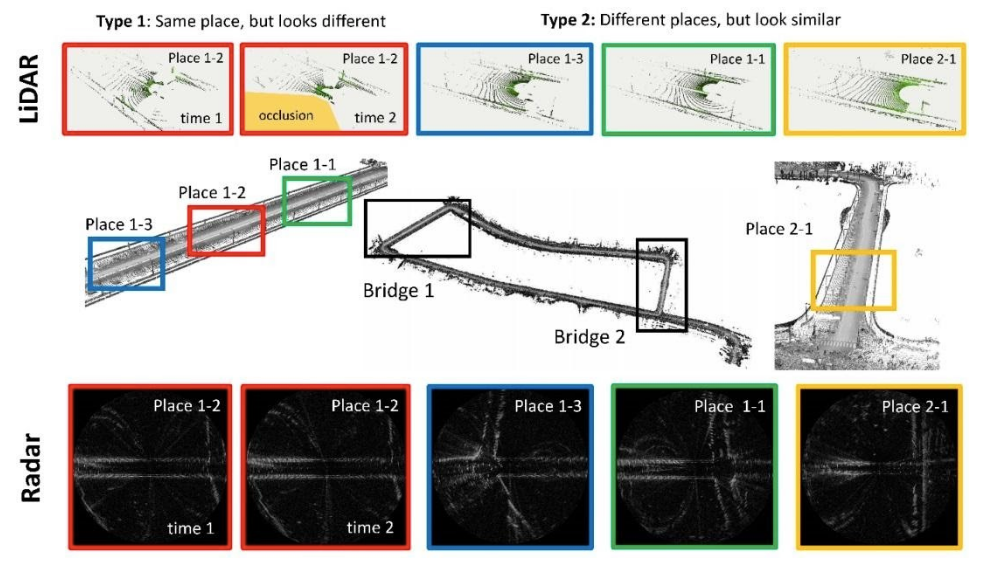

In autonomous driving research, Light Detection and Ranging (LiDAR) sensors and cameras are widely adopted for perception during navigation. Both sensors rely on light to provide rich information that helps robots understand their environment. Recent perception failures of LiDARs and cameras have prompted a search for solutions that work in tougher environments and allow reliable perception of the world in all weather conditions. For example, heavy rain, dust, and direct sunlight often degrade the output of these light-dependent sensors.

Prof. Ayoung Kim’s group recently introduced work that uses radar as the main navigational sensor. Radars have long been used in the automotive industry and in military applications. Their longer wavelength enables robust sensing even in visibility-degraded conditions. However, the lower resolution and noisy data can prevent radar from being applied directly to navigation. The group’s work includes a novel method that estimates motion from a sequence of these noisy radar data.

The main idea is to separate the translational and rotational components during estimation. Radar measurements are natively expressed in spherical coordinates, and converting them to Cartesian coordinates is computationally expensive. The group exploited the fact that orientation can be estimated accurately from the raw data without a coordinate transform. The estimated rotation is then used when estimating translation in a different coordinate system. This two-phase approach allows the module to estimate both rotation and translation efficiently.
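To illustrate why rotation can be read from the raw data, consider a scan stored as an array indexed by azimuth and range: a pure rotation of the sensor then appears as a circular shift along the azimuth axis. The sketch below is not the group's implementation. It is a minimal example that assumes a hypothetical array layout (rows are azimuth bins, columns are range bins) and uses plain circular cross-correlation, with the function name `estimate_rotation_bins` chosen only for illustration.

```python
import numpy as np


def estimate_rotation_bins(polar_prev, polar_curr):
    """Estimate the circular azimuth shift (in bins) from polar_prev to polar_curr.

    Both inputs are 2D arrays with shape (azimuth_bins, range_bins).
    This layout is an assumption made for the example.
    """
    # FFT along the azimuth axis only; range columns are treated independently.
    f_prev = np.fft.fft(polar_prev, axis=0)
    f_curr = np.fft.fft(polar_curr, axis=0)
    # Circular cross-correlation along azimuth, summed over all range bins.
    corr = np.fft.ifft(f_curr * np.conj(f_prev), axis=0).real.sum(axis=1)
    # The index of the peak is the shift that best aligns the two scans.
    return int(np.argmax(corr))


# Synthetic check: rotate a random "scan" by 37 of 400 azimuth bins.
rng = np.random.default_rng(0)
scan_prev = rng.random((400, 64))
scan_curr = np.roll(scan_prev, 37, axis=0)
shift_bins = estimate_rotation_bins(scan_prev, scan_curr)
rotation_deg = shift_bins * 360.0 / scan_prev.shape[0]
```

Because the shift is found directly in the azimuth-range array, no conversion to Cartesian coordinates is needed for this step. The recovered angle could then be used to de-rotate the scans before translation is estimated.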

By adopting phase correlation in a two-level estimation module, the method yields robust and accurate motion estimates from radar. The modified phase correlation allows the system to overcome noise and artifacts in sensor data covering a large urban environment. Together with the motion estimation algorithm, Prof. Kim’s group announced a radar dataset to support radar navigation research. Sensor fusion for radar has not been widely studied because of the lack of a public dataset. To overcome this limitation and bridge the gap between light-based sensors and radar, the team publicly released a multi-modal sensor dataset.
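For readers unfamiliar with phase correlation, the following sketch shows the textbook form of the technique on two Cartesian images that differ by a translation. It does not reproduce the group's modified variant, whose details are not given here. The function name `phase_correlation` and the integer-pixel, circular-shift assumption are illustrative choices only.

```python
import numpy as np


def phase_correlation(img_prev, img_curr, eps=1e-12):
    """Return the integer (row, col) shift that maps img_prev onto img_curr.

    Textbook phase correlation; assumes the images differ by a circular shift.
    """
    f_prev = np.fft.fft2(img_prev)
    f_curr = np.fft.fft2(img_curr)
    # Normalized cross-power spectrum: keep only the phase difference.
    cross = f_curr * np.conj(f_prev)
    cross /= np.abs(cross) + eps
    # The inverse transform peaks at the displacement between the images.
    corr = np.fft.ifft2(cross).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Map peak indices above half the image size to negative shifts.
    return tuple(
        int(p) if p <= n // 2 else int(p) - n
        for p, n in zip(peak, corr.shape)
    )


# Synthetic check: shift a random image by 5 rows and -9 columns.
rng = np.random.default_rng(0)
image_prev = rng.random((128, 128))
image_curr = np.roll(image_prev, (5, -9), axis=(0, 1))
shift = phase_correlation(image_prev, image_curr)
```

Normalizing the cross-power spectrum discards magnitude information, which is one reason phase correlation tends to tolerate intensity changes and some kinds of noise. Real radar images would also need windowing and sub-pixel peak refinement, which this sketch omits.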

Prof. Ayoung Kim, Dept. of Civil and Environmental Engineering, KAIST

Homepage: https://irap.kaist.ac.kr

E-mail: ayoungk@kaist.ac.kr