360° imaging has recently gained attention in many fields, including autonomous vehicles, drones, robots, and AR/VR. In general, raw 360° images are transformed into 2D planar representations that preserve the omnidirectional information, such as equirectangular projection (ERP) and cube map projection (CP), to ensure compatibility with existing imaging pipelines. In certain applications, omnidirectional images (ODIs) are projected back onto a sphere, or transformed with a different type of projection, and rendered for display. However, the angular resolution of a 360° image tends to be lower than that of a narrow field-of-view (FOV) perspective image, because it is captured with a fisheye lens on a sensor of identical size, so the same number of pixels must cover a much wider field of view. Moreover, the quality of a 360° image can be degraded when it is transformed between different projection types. Therefore, it is imperative to super-resolve low-resolution (LR) 360° images while taking various projections into account, in order to provide high visual quality under diverse conditions.

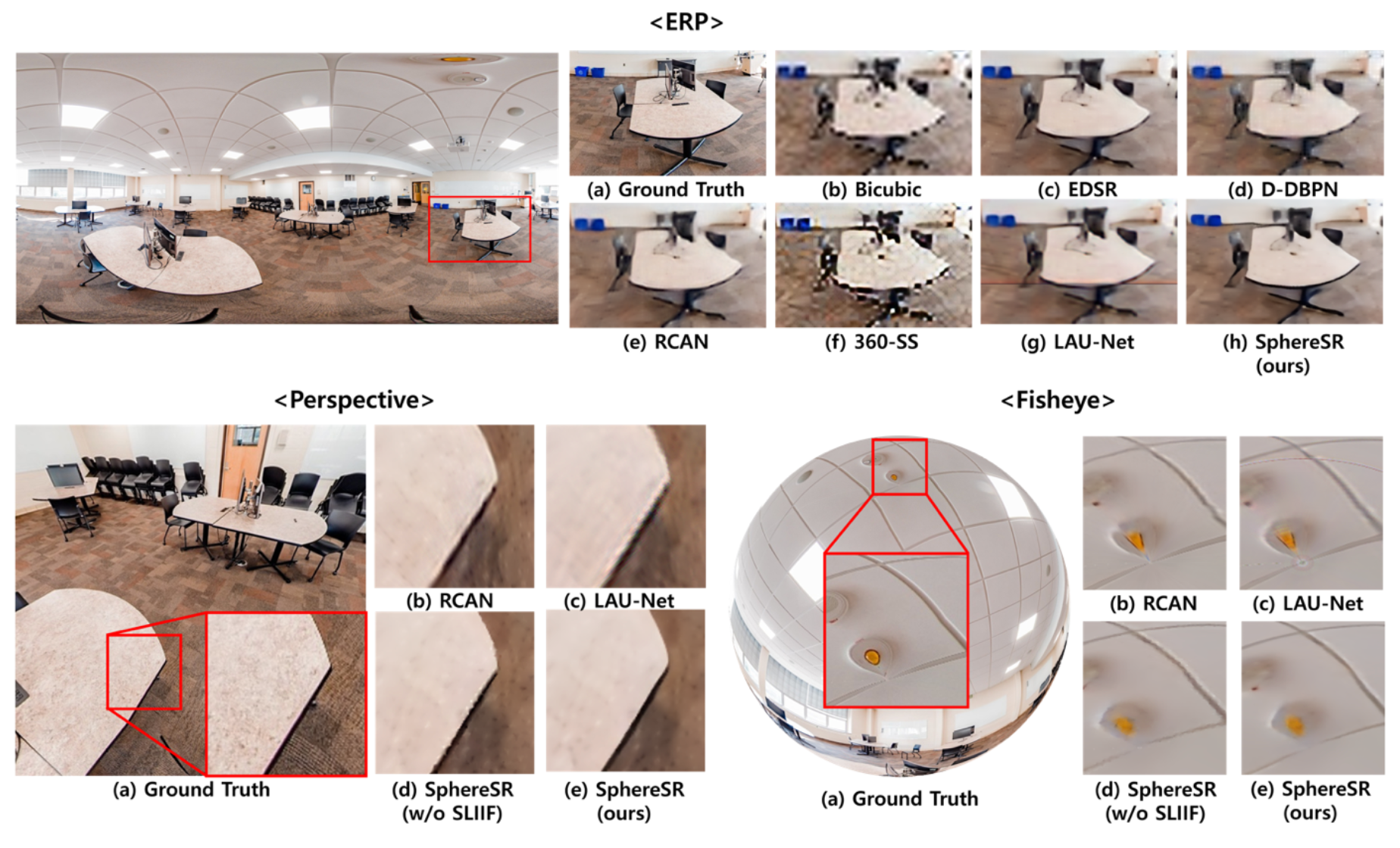

Recently, deep learning has brought a significant performance boost to 2D single image super-resolution (SISR). However, directly applying these methods to 360° images stored in 2D planar representations is less effective, because the pixel density and texture complexity vary across different positions in such representations. Furthermore, a 360° image can be flexibly converted into various projection types, since in real applications the user specifies the projection type, direction, and FOV. Thus, it is vital to address the ERP distortion problem and to super-resolve an ODI into a high-resolution (HR) image with an arbitrary projection type rather than a fixed one.
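To make the pixel-density point concrete, the following is a minimal sketch (not taken from the study) that maps ERP pixels to latitude and longitude and computes the solid angle each pixel covers on the unit sphere. The function names `erp_pixel_to_sphere` and `erp_pixel_solid_angle` and the 512 × 1024 image size are assumptions chosen for illustration; the sketch also assumes the usual ERP layout in which rows span latitude uniformly from the north pole to the south pole.

```python
import numpy as np


def erp_pixel_to_sphere(row, col, height, width):
    """Map ERP pixel centres to (latitude, longitude) in radians.

    Assumes row 0 is the top of the image (north pole side) and that
    columns span longitude from -pi to pi.
    """
    lat = np.pi / 2 - (row + 0.5) / height * np.pi
    lon = (col + 0.5) / width * 2 * np.pi - np.pi
    return lat, lon


def erp_pixel_solid_angle(height, width):
    """Solid angle (steradians) covered by a single pixel in each ERP row."""
    rows = np.arange(height)
    lat_top = np.pi / 2 - rows / height * np.pi
    lat_bottom = np.pi / 2 - (rows + 1) / height * np.pi
    dlon = 2 * np.pi / width
    # Area of a latitude-longitude cell on the unit sphere.
    return dlon * (np.sin(lat_top) - np.sin(lat_bottom))


if __name__ == "__main__":
    height, width = 512, 1024  # hypothetical ERP resolution
    omega = erp_pixel_solid_angle(height, width)
    # All pixels together should cover the whole sphere (4*pi steradians).
    print("total solid angle:", omega.sum() * width)
    # A pixel near the equator covers far more of the sphere than one near a pole.
    print("equator / pole ratio:", omega[height // 2] / omega[0])
```

Because every ERP row holds the same number of pixels while the circle of latitude it represents shrinks toward the poles, polar regions are heavily oversampled relative to the equator, which is one way to see why a planar SISR model treats the sphere unevenly.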

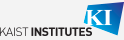

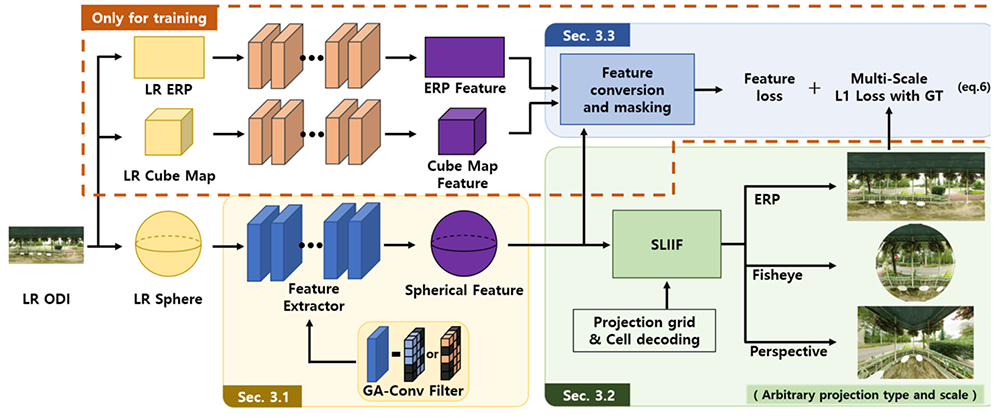

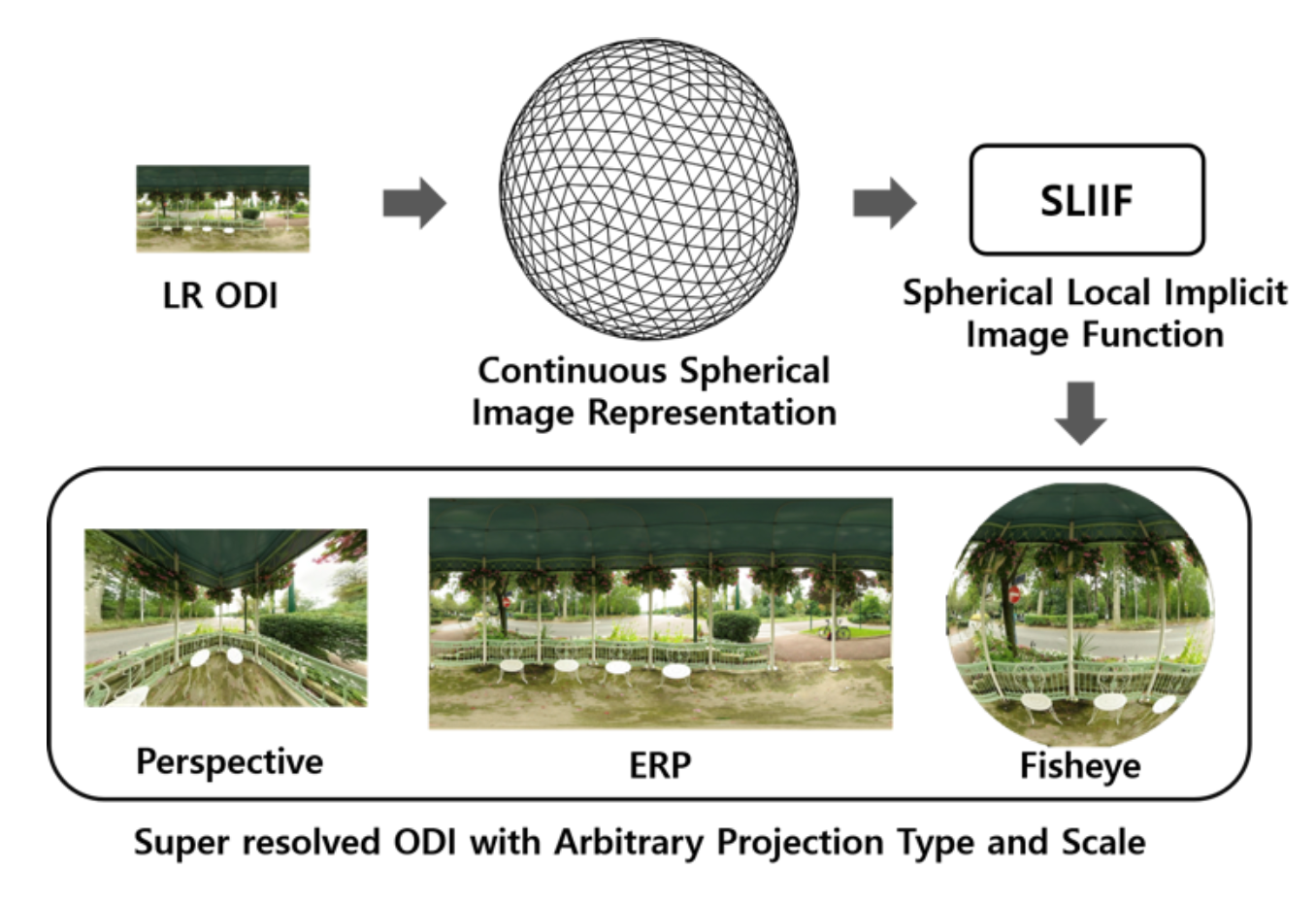

The research team of Prof. Kuk-Jin Yoon in the Department of Mechanical Engineering has developed a novel framework that super-resolves an LR 360° image into an HR image with an arbitrary projection type via a continuous spherical image representation. The team proposed a feature extraction module that represents spherical data based on icosahedrons and efficiently extracts features on a spherical surface composed of uniform faces. In this way, they solve the ERP image distortion problem and resolve the difference in pixel density across latitudes. They also developed a spherical local implicit image function (SLIIF) that can predict RGB values at arbitrary coordinates on a spherical feature map. SLIIF works on triangular faces, buttressed by position embedding based on normal plane polar coordinates to obtain relative coordinates on a sphere. The method therefore tackles the pixel-misalignment issue that arises when the image is reprojected into another projection type. As a result, the framework can predict RGB values for any SR scale parameter.
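As a rough illustration of relative coordinates on a sphere, the sketch below computes polar coordinates of a query point in the plane tangent to the unit sphere at a reference point. This is not the authors' implementation: it assumes that "normal plane" refers to the plane perpendicular to the radius at the reference point, it uses a gnomonic (central) projection onto that plane as one possible choice, and the function names `unit_vector` and `tangent_plane_polar` are hypothetical.

```python
import numpy as np


def unit_vector(lat, lon):
    """Unit vector on the sphere for a latitude and longitude in radians."""
    return np.array([
        np.cos(lat) * np.cos(lon),
        np.cos(lat) * np.sin(lon),
        np.sin(lat),
    ])


def tangent_plane_polar(center, query):
    """Polar coordinates (r, theta) of `query` relative to `center`.

    `query` is projected from the sphere centre onto the plane tangent
    to the unit sphere at `center` (gnomonic projection, an assumption
    made for this sketch). theta is measured from local east toward
    local north.
    """
    c = center / np.linalg.norm(center)
    q = query / np.linalg.norm(query)
    cos_angle = float(np.dot(c, q))
    if cos_angle <= 0.0:
        raise ValueError("query must lie in the same hemisphere as center")

    # Offset of the projected point from the tangent point, inside the plane.
    offset = q / cos_angle - c

    # Local east/north basis of the tangent plane.
    east = np.cross(np.array([0.0, 0.0, 1.0]), c)
    if np.linalg.norm(east) < 1e-12:
        # At a pole east is undefined; pick an arbitrary fixed direction.
        east = np.array([1.0, 0.0, 0.0])
    east = east / np.linalg.norm(east)
    north = np.cross(c, east)

    x, y = np.dot(offset, east), np.dot(offset, north)
    return np.hypot(x, y), np.arctan2(y, x)


if __name__ == "__main__":
    center = unit_vector(0.0, 0.0)
    query = unit_vector(0.1, 0.0)  # slightly north of the reference point
    r, theta = tangent_plane_polar(center, query)
    print("r =", r, "theta =", theta)
```

Coordinates expressed this way depend only on the position of the query relative to the reference point on the sphere, not on where either point lands in a particular planar projection, which is the property the position embedding described above relies on.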

This study was presented in June 2022 at the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), a top-tier international conference on computer vision and machine learning. The results of this study can be applied to many real-world applications and systems that require omnidirectional image super-resolution, including autonomous vehicles, drones, and robots.

This work was supported by a National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (NRF-2022R1A2B5B03002636).

Kuk-Jin Yoon, Department of Mechanical Engineering, KAIST

Homepage: http://vi.kaist.ac.kr

E-mail: kjyoon@kaist.ac.kr