Research on autonomous cars has become popular worldwide. Numerous automobile companies and Google, which is a leader in autonomous car research, have shown interest in this topic, as have many robotics researchers. The question they want to answer is “How will a car drive without a human driver?” Professor Ayoung Kim and her research team at the Korea Advanced Institute of Science and Technology (KAIST) focus on building maps for autonomous vehicles. For a car to drive fully by itself in the current road system, there needs to be a digital map that the car can read. Maps already exist in car navigation systems and in mobile phone apps. Will these be sufficient for autonomous cars in the near future? This is the question the researchers would like to answer in collaboration with NAVER, a top search engine company that also provides mapping services in South Korea.

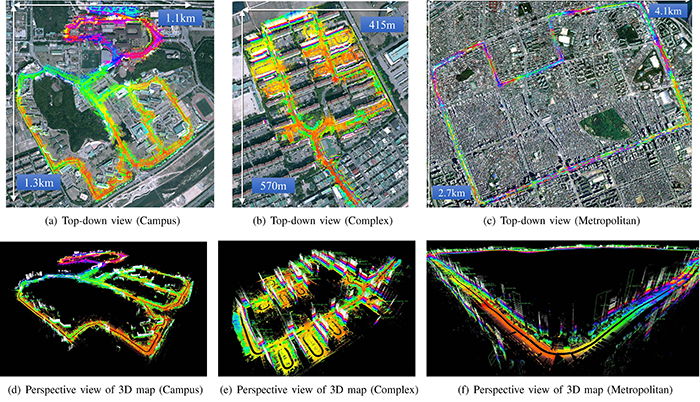

For a car to be autonomous, it needs to solve two problems: mapping, which constructs a representation of what the environment looks like, and localization, which determines where the agent, in this case the car, is in that environment. The quality of the map relies heavily on the localization accuracy of the mapping agent. This problem is widely studied in robotics as Simultaneous Localization and Mapping (SLAM), which solves for localization and mapping at the same time. SLAM uses sensors to map the environment and build a digital map for the autonomous car. In the process, the sensor measurements are modeled so that they compensate for one another's errors, and existing digital maps are used as well. In collaboration with NAVER, Intelligent Robotic Autonomy and Perception (IRAP) focuses on urban environments, which contain highly complex and varied structures, when building a map. Professor Kim’s aim is to map a variety of urban environments using SLAM, from campuses to apartment complexes and even a metropolitan city.
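The idea that sensor measurements compensate for one another can be illustrated with a very small example. The sketch below is not the team's SLAM method; it only shows inverse-variance weighting, a standard way to combine two independent noisy estimates of the same quantity. The function name `fuse` and the sample numbers (a GPS-like and an odometry-like position along a road) are assumptions made for illustration.

```python
def fuse(estimate_a, var_a, estimate_b, var_b):
    """Fuse two independent scalar estimates by inverse-variance weighting.

    Illustrative only: a full SLAM system estimates many poses and map
    features jointly, not a single scalar.
    """
    w_a = 1.0 / var_a  # a smaller variance gives a larger weight
    w_b = 1.0 / var_b
    fused = (w_a * estimate_a + w_b * estimate_b) / (w_a + w_b)
    fused_var = 1.0 / (w_a + w_b)  # never larger than either input variance
    return fused, fused_var


# Hypothetical readings: position along a road in metres.
gps_pos, gps_var = 10.0, 4.0    # noisy absolute fix
odom_pos, odom_var = 12.0, 1.0  # more precise over a short distance
position, variance = fuse(gps_pos, gps_var, odom_pos, odom_var)
print(position, variance)
```

The fused estimate lies closer to the more certain measurement, and its variance is smaller than that of either input, which is the sense in which the sensors compensate for each other.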

The sensor system used in this study consists of three LiDAR sensors, four cameras, a GPS, an IMU, an altimeter, and wheel encoders. As can be seen in Figure 2, two of the LiDAR sensors are rotated vertically to capture line-by-line data of the environment. As the base platform moves, these lines accumulate, and a 3D point cloud is obtained by stacking the sequence of scan lines. Professor Kim’s research team has proposed a platform that builds a map using various sensors and aerial images. By June, IRAP will turn two cars into mapping cars that can act as SLAM platforms, and will collaborate with NAVER in using existing maps to build a new map representation for the autonomous car.
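The line-stacking step can be sketched in a few lines of code. This is a minimal illustration under simplifying assumptions, not the team's pipeline: the vehicle pose is taken as a planar `(x, y, yaw)` tuple, the ground is flat, and each vertical scan line lies in the vehicle's lateral-vertical plane. The function names and the sample data are hypothetical.

```python
import math


def scan_line_to_points(ranges, angles, pose):
    """Project one vertical scan line into the world frame.

    ranges/angles: beam lengths (m) and elevation angles (rad) in the scan plane.
    pose: assumed planar vehicle pose (x, y, yaw) in metres and radians.
    """
    x, y, yaw = pose
    points = []
    for r, a in zip(ranges, angles):
        lateral = r * math.cos(a)  # sideways offset in the vehicle frame
        height = r * math.sin(a)   # vertical offset in the vehicle frame
        # Rotate the lateral offset by yaw, then translate by the position.
        wx = x - lateral * math.sin(yaw)
        wy = y + lateral * math.cos(yaw)
        points.append((wx, wy, height))
    return points


def stack_scan_lines(scans, poses):
    """Accumulate scan lines taken at successive poses into one 3D point cloud."""
    cloud = []
    for (ranges, angles), pose in zip(scans, poses):
        cloud.extend(scan_line_to_points(ranges, angles, pose))
    return cloud


# Two hypothetical scan lines taken 1 m apart while driving along the x axis.
scans = [([2.0, 2.0], [0.0, math.pi / 4]), ([2.0, 2.0], [0.0, math.pi / 4])]
poses = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
cloud = stack_scan_lines(scans, poses)
print(len(cloud))
```

Because every point is placed using the pose at which its line was captured, any error in the pose estimate shows up directly as distortion in the point cloud, which is why the map quality depends on localization accuracy.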

In 2015, IRAP built 3D maps of urban environments in three different areas, as shown in Figure 1. The SLAM approach enabled the research team to maintain consistent localization and mapping over travel distances of 9 to 15 km. By 2020, in collaboration with Professor Shim and his team from the Dept. of Aerospace Engineering, IRAP aims to build a map with 10 cm accuracy that is suitable for autonomous cars. In 2016, the first map validation will be conducted using the KAIST map that was made for the autonomous car (Eurecar by Prof. Shim).